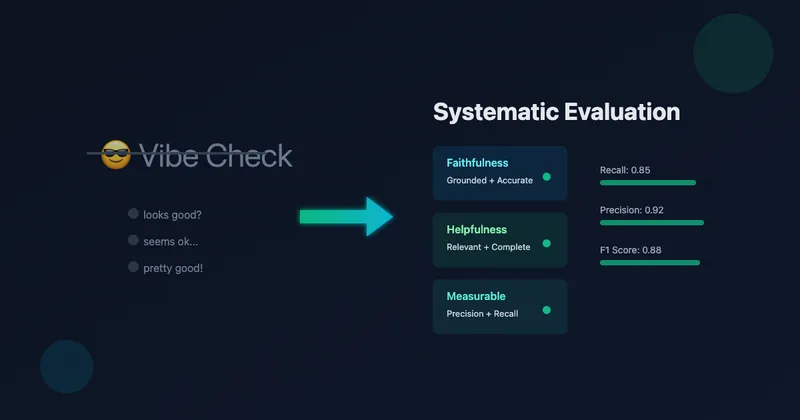

Beyond the Vibe Check: A Systematic Approach to LLM Evaluation

Stop relying on gut feelings to evaluate LLM outputs. Learn systematic approaches to building trustworthy evaluation pipelines with measurable metrics, proven methods, and production-ready practices. This practical guide covers faithfulness vs. helpfulness, LLM-as-judge techniques, bias mitigation, and continuous monitoring.