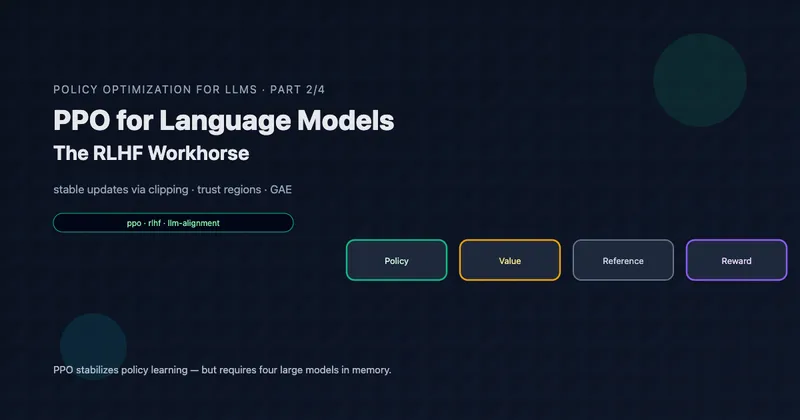

PPO for Language Models: The RLHF Workhorse

A deep dive into Proximal Policy Optimization (PPO), the algorithm behind most LLM alignment. Covers trust regions, the clipped objective, Generalized Advantage Estimation (GAE), and why PPO's four-model architecture creates problems at scale.

Series: Policy Optimization for LLMs: From Fundamentals to Production